Our Work on Generative AI Safety in 2023

May 12, 2023

Intuition Machines (IM) scientists and engineers have worked on generative AI for many years, with a focus on AI safety and harm reduction. This post covers some of our recent research reports.

Last Updated: June 9, 2023

Generative AI is having a moment right now, thanks to the recent availability of tools such as ChatGPT, Bard, and Claude.

Intuition Machines scientists and engineers have worked on generative AI research and applications for many years, so the popularity of these tools did not surprise us.

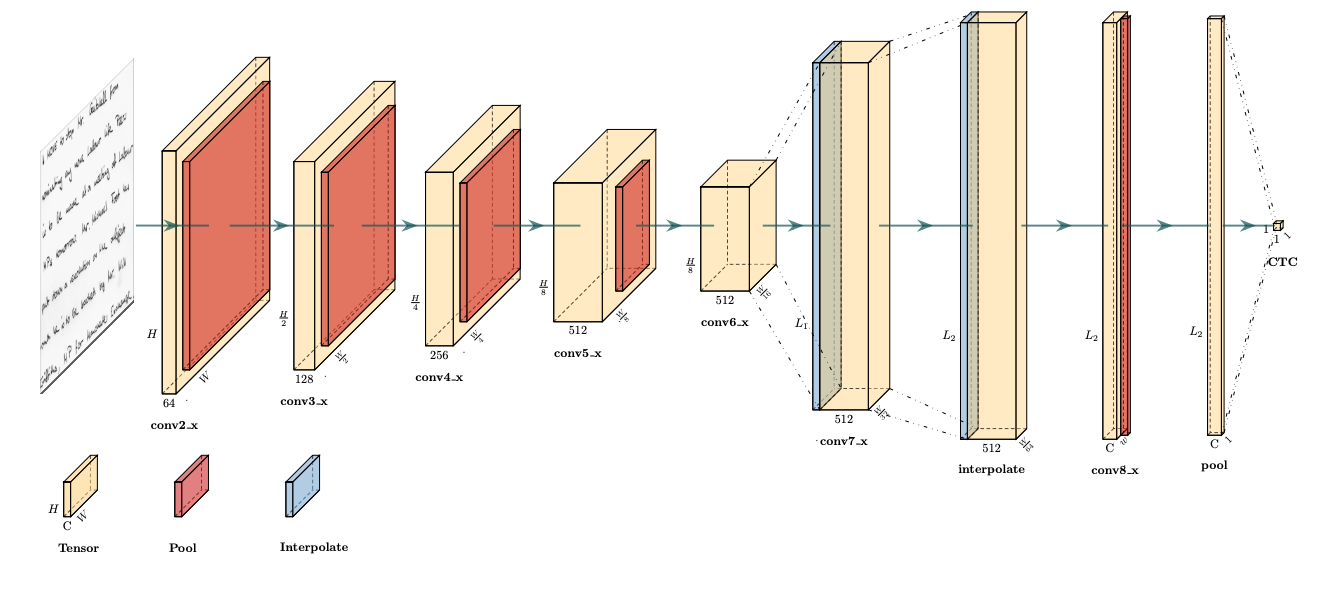

Consistent with our long-standing practice, the related work we have published at academic machine learning conferences focuses on visual applications.

However, we have noticed a large gap between public perception of these models' capabilities and their current reality. As part of our work on AI safety, our hCaptcha research team recently published several reports that demonstrate this gap with concrete examples.

These public reports only scratch the surface of our many years of research on these topics, and the results are already deeply embedded into many of our products and services.

We look forward to bringing our expertise in AI safety to an ever-wider audience. These technologies continue to spread further into our lives, and our goal is to help everyone enjoy the benefits of ML while minimizing the inevitable abuse that comes with any new technology.

How Well Do AI Text Detectors Work?

Public awareness of generative AI's abuse potential is increasing, and a number of products now claim to offer LLM output detection through text analysis. Having worked on these topics for many years, we have found that naive approaches to this problem are extremely unreliable.

We used data from our recent report on generative AI abuse to test popular AI text detectors on confirmed LLM and human output from in-the-wild abuse samples. No public AI text detector we tested scored better than random chance.
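To make "better than random chance" concrete, here is an illustrative sketch, not the methodology used in the report. It assumes a balanced set of labeled samples (half LLM output, half human output) and a detector that returns a binary verdict per sample. Under those assumptions a guessing detector is expected to be right 50% of the time, so one can ask whether its accuracy is significantly above 50% using an exact one-sided binomial test. The function names, the sample data, and the 0.05 significance threshold are hypothetical choices for illustration.

```python
from math import comb


def accuracy(preds, labels):
    """Fraction of samples where the detector's verdict matches the ground truth."""
    if len(preds) != len(labels) or not labels:
        raise ValueError("preds and labels must be non-empty and the same length")
    return sum(p == y for p, y in zip(preds, labels)) / len(labels)


def binomial_p_value(correct, n, chance=0.5):
    """One-sided exact binomial test: probability of getting at least `correct`
    answers right out of `n` if the detector were only guessing at rate `chance`."""
    return sum(
        comb(n, k) * chance**k * (1 - chance) ** (n - k)
        for k in range(correct, n + 1)
    )


def beats_chance(preds, labels, alpha=0.05):
    """True if accuracy is significantly above 50%.

    Assumption: the label set is balanced, so 50% is the chance baseline.
    `alpha` is an illustrative significance threshold.
    """
    if len(preds) != len(labels) or not labels:
        raise ValueError("preds and labels must be non-empty and the same length")
    correct = sum(p == y for p, y in zip(preds, labels))
    return binomial_p_value(correct, len(labels)) < alpha


if __name__ == "__main__":
    # Hypothetical data: True = LLM-generated, False = human-written.
    labels = [True] * 10 + [False] * 10
    # A detector that gets 12 of 20 right (60% accuracy).
    preds = [True] * 6 + [False] * 4 + [False] * 6 + [True] * 4
    print(accuracy(preds, labels), beats_chance(preds, labels))
```

With only 20 samples, 60% accuracy is not evidence of doing better than guessing: a coin flip reaches 12 or more correct answers about a quarter of the time. Larger evaluation sets are needed before a modest accuracy figure means anything.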

Generative AI Is Making Some Platforms Useless

Large language models ("LLMs") cannot yet replace human labor for many tasks, but what they already do very well is convince some people, through plausible-sounding hallucinations, that they are providing useful answers. When users ask about areas outside their own expertise, this often leads them to overestimate the quality of LLM output.

We applied our state-of-the-art text detection approach to user answers and found that nearly 80% of bidders on a popular freelance work platform were using LLMs in sample jobs our researchers posted, and that 100% of answers to screening questions were LLM-generated or LLM-assisted.

Detecting Large Language Models

This report touches on some examples of our detection work in the field of generative AI, focusing on active challenge scenarios.

It is difficult to share information on this topic while respecting the interests of our customers and their users, so we generally avoid publishing details of specific detections. As a result, our publication record in this area is sparse compared with our many academic machine learning papers and conference reports, despite the large and talented research team we have focused on it.

However, here we give a few specific examples of one of our simpler detection methodologies to show just how large the gap between human and machine intelligence is. The way people and machines analyze information is likely to remain fundamentally different for the foreseeable future, even when the raw performance of machine intelligence on some tasks matches human capabilities.

Image source: Wikimedia Commons, originalbennyc