Deep Learning Infrastructure at Scale: An Overview

February 25, 2019

Tom Bishop, our Director of ML Research, recently published an article for MLconf on how Intuition Machines (IM) manages the large-scale distributed systems and data requirements of our ML work.

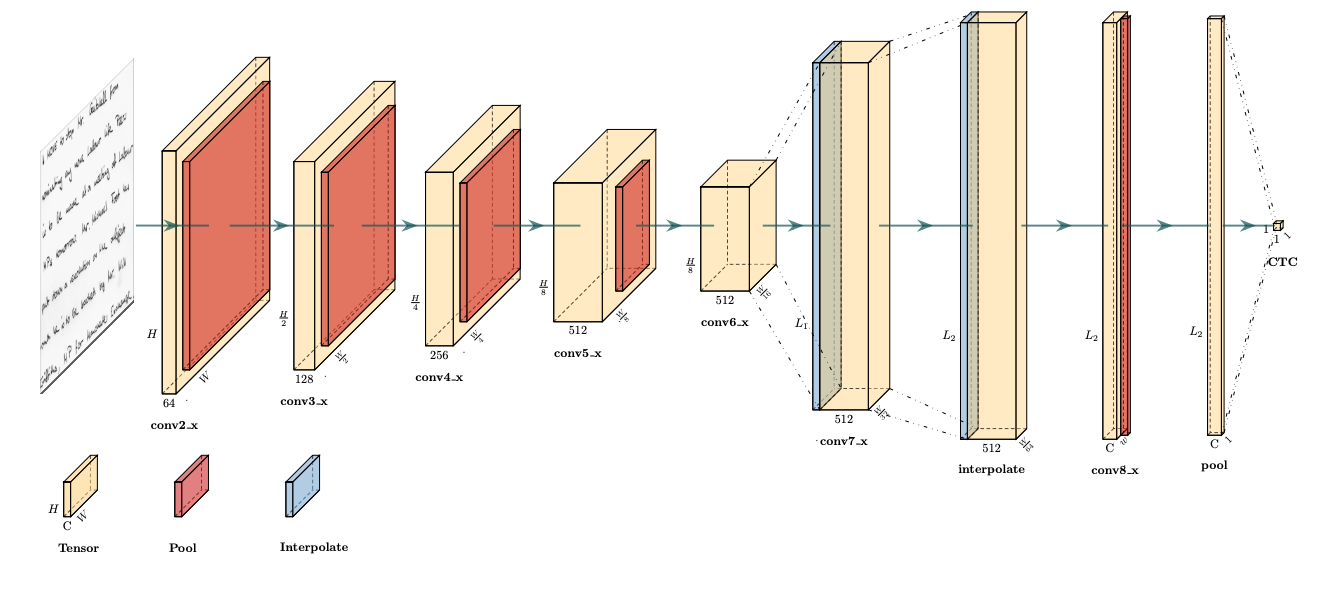

Many commercial applications of deep learning need to operate at large scale, typically by serving deployed models to large numbers of customers. However, inference is only half the battle. The other side of the problem, which we address here, is how to scale ML model building and research when training datasets grow very large and training must be distributed across machines in the cloud. At Intuition Machines, we often work with web-scale datasets of images and video, so an efficient, scalable, multi-user distributed training platform is essential.

The post summarizes our recent review of the field. It is intended as an introduction to the principles of training at scale, along with a brief overview of the best available open-source solutions for training your own networks this way.